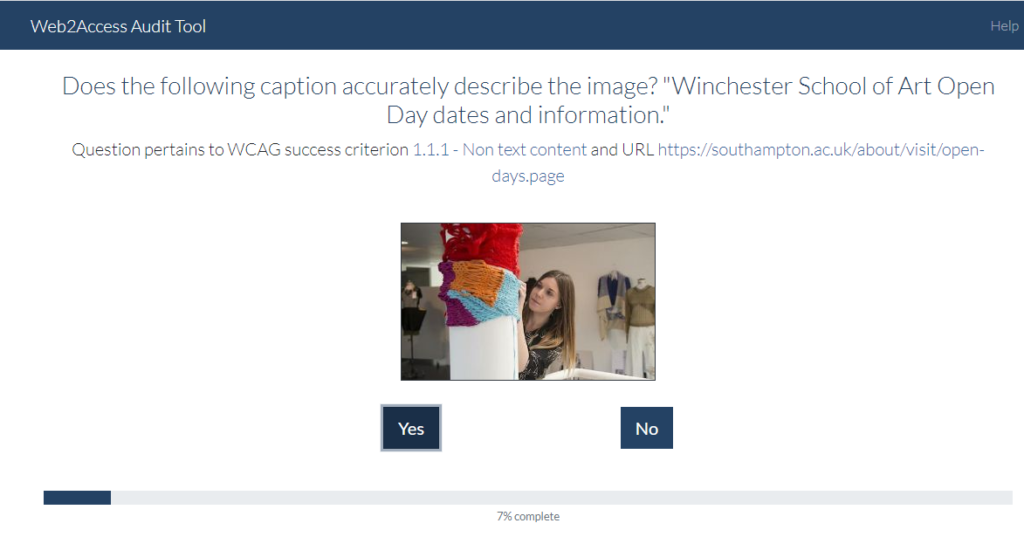

A Group Design Project has helped us improve some of the automated web accessibility checks in our Web2Access review system. The project has produced a way of checking that the alternative text used to describe images on web pages is accurate.

Accurate and simple descriptions are important for those who use screen readers, such as individuals with visual impairments. The ‘alt text’ that is used to describe an image is usually added by the author of a web page, but in recent years this process has often been automated. The results have been variable and do not always describe the image accurately.

As part of the WCAG 2.1 checks for alt tags, an additional check has been added using a pretrained network and object detection (MobileNet and COCO-SSD in TensorFlow). The automated checker first reviews the alt tags using the Pa11y checker. The text resulting from the image classification is then compared with the descriptive text in the ‘img alt’ attribute of each image on the web page. If the two texts match, the automated review is accepted; if none of the words correspond, a visual appraisal system presents the findings to the accessibility reviewer. This process acts as a double check and ensures issues can be flagged to the developer.
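The comparison step can be illustrated with a minimal sketch. It assumes the object detector has already returned a list of labels for an image (for example, COCO class names such as "dog" or "couch"). The function names, the stop-word list and the simple word-overlap rule are illustrative assumptions, not the code used in Web2Access.

```python
import re

# Hypothetical list of words ignored in the comparison (an assumption).
STOP_WORDS = {"a", "an", "the", "of", "in", "on", "and", "with", "at"}


def tokenize(text):
    """Lower-case the text and return its set of non-trivial words."""
    words = re.findall(r"[a-z]+", text.lower())
    return {word for word in words if word not in STOP_WORDS}


def alt_text_matches(alt_text, detected_labels):
    """Return True if any word from the detected labels appears in the alt text."""
    alt_words = tokenize(alt_text)
    label_words = set()
    for label in detected_labels:
        label_words |= tokenize(label)
    return bool(alt_words & label_words)


def review_image(alt_text, detected_labels):
    """Accept the automated review on a match, otherwise pass it to a human."""
    if alt_text_matches(alt_text, detected_labels):
        return "accepted"
    return "needs review"
```

For example, `review_image("A dog sitting on a sofa", ["dog", "couch"])` is accepted because "dog" appears in both texts, whereas `review_image("Company logo", ["person", "bicycle"])` is passed to the reviewer. Exact word matching is deliberately simple: it would miss plurals and synonyms such as "sofa" and "couch", so a real checker would probably need stemming or a synonym list.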

A similar process has been used to detect visual overlaps of content. In the future, the titles of hypertext links could also be checked to ensure they accurately describe where the user would be sent if the link were activated. This would go beyond the existing automated checks, which only flag ‘click here’ or ‘more’ links and broken links.

In the last few months, the results have been beta tested and integrated into the Web2Access digital accessibility review system by the ECS Accessibility team. The output can now be viewed as part of an Accessibility Statement, which has been required by law for public sector websites since September 2018.